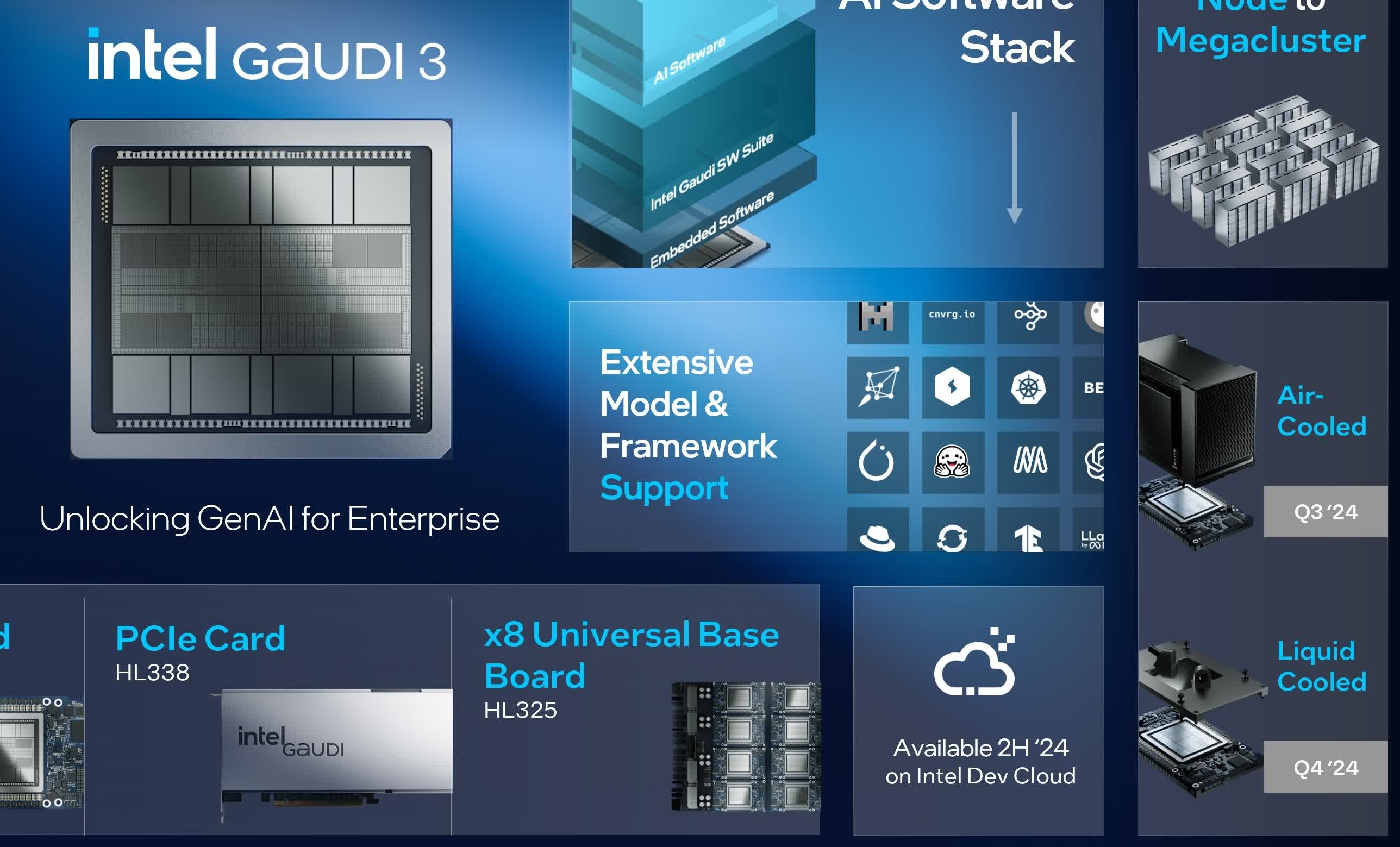

What just happened? Intel threw down the gauntlet against Nvidia in the heated battle for AI hardware supremacy. At Computex this week, CEO Pat Gelsinger unveiled pricing for Intel’s Gaudi 2 and next-gen Gaudi 3 AI accelerator chips, and the numbers look disruptive.

Pricing for products like these is typically kept hidden from the public, but Intel has bucked the trend and provided some official figures. The flagship Gaudi 3 accelerator will cost around $15,000 per unit when purchased individually, which is 50 percent cheaper than Nvidia’s competing H100 data center GPU.

The Gaudi 2, while less powerful, also undercuts Nvidia’s pricing dramatically. A complete 8-chip Gaudi 2 accelerator kit will sell for $65,000 to system vendors. Intel claims that’s just one-third the price of comparable setups from Nvidia and other rivals.

For the Gaudi 3, the same 8-accelerator kit configuration costs $125,000. Intel estimates that is about two-thirds the cost of alternative solutions at that high-end performance tier.
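To put the kit prices in per-chip terms, here is a minimal sketch of the arithmetic in Python. It uses only the figures quoted above; the function names `per_accelerator` and `implied_rival_price` are made up for illustration, and the "implied rival price" is simply what Intel's one-third claim works out to, not a published Nvidia price.

```python
# Figures quoted in the article, in USD. Each kit holds 8 accelerators.
KIT_SIZE = 8
GAUDI2_KIT = 65_000
GAUDI3_KIT = 125_000
GAUDI3_SINGLE = 15_000  # approximate single-unit price quoted in the article


def per_accelerator(kit_price: float, kit_size: int = KIT_SIZE) -> float:
    """Average price per accelerator within a kit."""
    return kit_price / kit_size


def implied_rival_price(price: float, numerator: int, denominator: int) -> float:
    """Rival price implied by 'ours costs numerator/denominator of theirs'.

    Hypothetical helper: it only inverts the claimed ratio.
    """
    return price * denominator / numerator


gaudi2_each = per_accelerator(GAUDI2_KIT)
gaudi3_each = per_accelerator(GAUDI3_KIT)

# Intel's claim: the Gaudi 2 kit is one-third the price of comparable setups.
rival_kit_vs_gaudi2 = implied_rival_price(GAUDI2_KIT, 1, 3)

print(f"Gaudi 2 per accelerator (kit): ${gaudi2_each:,.0f}")
print(f"Gaudi 3 per accelerator (kit): ${gaudi3_each:,.0f}")
print(f"Gaudi 3 single unit (approx.): ${GAUDI3_SINGLE:,.0f}")
print(f"Rival kit implied by Gaudi 2 claim: ${rival_kit_vs_gaudi2:,.0f}")
```

By this arithmetic, the Gaudi 3 kit works out slightly higher per chip than the roughly $15,000 single-unit figure. That is plausible because the single-unit price is approximate and a kit may cover more than the bare accelerators.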

At #Computex2024, Intel CEO @PGelsinger unveiled all new Intel® Xeon® 6 processors, Lunar Lake architecture and 80+ new AI PC designs and standard AI kits including eight Intel® Gaudi® 2 & 3 accelerators. pic.twitter.com/viHlLGQVDd

– Intel India (@IntelIndia) June 7, 2024

For context on Gaudi 3 pricing, Nvidia’s newly announced Blackwell B100 GPU is expected to cost around $30,000 per unit. Meanwhile, the high-performance Blackwell CPU+GPU combo, the GB200, is expected to sell for roughly $70,000.

Of course, pricing is just one part of the equation. Performance and the software ecosystem are equally crucial considerations. On that front, Intel insists the Gaudi 3 keeps pace with or outperforms Nvidia’s H100 across a variety of important AI training and inference workloads.

Benchmarks cited by Intel show the Gaudi 3 delivering up to 40 percent faster training times than the H100 in large 8,192-chip clusters. Even a smaller 64-chip Gaudi 3 setup offers 15 percent higher throughput than the H100 on the popular LLaMA 2 language model, according to the company. For AI inference, Intel claims a 2x speed advantage over the H100 on models like LLaMA and Mistral.

However, while the Gaudi chips leverage open standards like Ethernet for easier deployment, they do not support Nvidia’s ubiquitous CUDA platform, which most AI software relies on today. Convincing enterprises to port their code to Gaudi could be tough.

To drive adoption, Intel says it has lined up at least 10 major server vendors – including new Gaudi 3 partners like Asus, Foxconn, Gigabyte, Inventec, Quanta, and Wistron. Familiar names like Dell, HPE, Lenovo, and Supermicro are also on board.

Still, Nvidia is a force to be reckoned with in the data center world. In the final quarter of 2023, it claimed a 73 percent share of the data center processor market, and that number has continued to rise, chipping away at the shares of both Intel and AMD. The consumer GPU market isn’t all that different, with Nvidia commanding an 88 percent share.

It’s an uphill battle for Intel, but these massive price differences may help close the gap.